Adding caching

8th April 2024

Excitingly for me, but less so for this blog, I've been doing some actual programming for my day job. It's engaging stuff so I find myself spending longer at work and once I do get home my mind is still busy thinking about it. As a result, I'm not in the mood to work on Tinn and progress…well it stops. However, there have also been days when I've had to wear my manager hat and on those days I am eager to get home and do some programming. So in reality, progress has been made, and I’m overdue for an update.

Code compartmentalisation

I've previously written about the “blog module". The thing is I had not done anything special to separate the code into a module. C has no canonical meaning for “module" but what most people seem to mean is a design pattern using a header file to define a public interface and a C file with the implementation details. This is the pattern I followed, starting with the blog module, then creating modules for the buffer code I'd written, the server code, the client code and so on.

Separating code into modules like this offers a bunch of advantages. Code organisation for one. As the project grows, having the code in separate organised files can make it easier to find the code you are looking for. It can also be helpful when visualising how the program works. You can think at a high level about how the modules interact and then quite separately think about how each individual module works. It also helps avoid name clashes for the implementation details by defining “private" functions as static, this gives them file scope and so can only be seen and used within that implementation file.

It also has some disadvantages, the main one being the compilation processes. Up to now I'd been using the following command to compile Tinn:

gcc -o build/tinn src/tinn.c

After breaking the code into modules, I now need to list every source file:

gcc -o build/tinn src/tinn.c src/blog.c src/buffer.c src/client.c src/console.c src/net.c src/routes.c src/scanner.c src/server.c src/utils.c

It's cumbersome to type and it is going to keep growing as I add modules. I could write a shell script, but there are tools out there already for this. Namely GNU Make.

Make is a tool used to manage and automate builds, you populate a makefile that describes how to build your program and then let Make do the work. Out of the box it has some features that elevates it above a simple shell script, for example it only builds files it needs to. If a file has not been modified since it was last compiled, Make skips over that part of the build, reusing what it can and speeding up the whole process.

I'd been avoiding using Make because setting up the makefile was something additional I needed to learn. Eventually I decided to stop procrastinating and jump in. I read https://makefiletutorial.com and created a makefile using their cookbook for inspiration, which in turn was based on a blog post by Job Vranish.

# config

TARGET := tinn

RUN_ARGS := ../moohar/www

COMP_ARGS := -Wall -Wextra -std=c17 -pedantic

# dirs

BUILD := ./build

SRC := ./src

# build list of source files

SRCS := $(shell find $(SRC) -name "*.c")

# turn source file names into object file names

OBJS := $(SRCS:$(SRC)/%=$(BUILD)/tmp/%)

OBJS := $(OBJS:.c=.o)

# and dependacy file names

DEPS := $(OBJS:.o=.d)

# build list of sub directories in src

INC := $(shell find $(SRC) -type d)

INC_ARGS := $(addprefix -I,$(INC))

# short cuts

.PHONY: build run trace clean

build: $(BUILD)/$(TARGET)

run: build

@$(BUILD)/$(TARGET) $(RUN_ARGS)

trace: build

@$(BUILD)/$(TARGET) -v $(RUN_ARGS)

clean:

@rm -r $(BUILD)

# link .o objects into an executible

$(BUILD)/$(TARGET): $(OBJS)

@$(CC) $(COMP_ARGS) $(OBJS) -o $@

# complile .c source into .o object files

$(BUILD)/tmp/%.o: $(SRC)/%.c

@mkdir -p $(dir $@)

@$(CC) $(COMP_ARGS) -MMD -MP $(INC_ARGS) -c $< -o $@

# includes the .d makefiles we made when compiling .c source files

-include $(DEPS)This is a generic makefile that should work for most C projects and I shouldn't really need to touch it again. I imagine therefore that while I currently have a good handle on makefiles, I will now slowly forget the details of how they work.

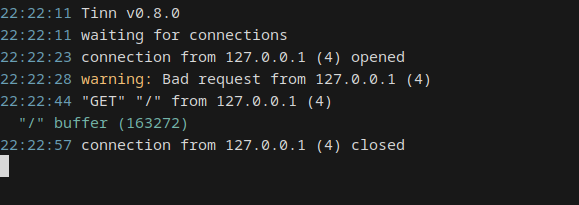

Console logging

While moving everything to modules, I took the time to improve the server’s logging and created a console module. This allows me to log things at five different levels. I went with trace, debug, info, warning and error. I then replaced all the various calls to print functions with calls to log to the console.

Why? Well it means I can provide some standard formatting, like prefixing the time to console output. It also sets me up for future enhancements. Currently all console output is just sent to the terminal (technically stdout and stderr). When I decide to send console output to a file as well, I now have a central place to do that.

Command line arguments

I set the default log output level to debug. This means that any trace logging gets silently ignored. I obviously wanted a way to change that setting for when I wanted to see the trace output, and preferably without recompiling the code. So I updated how the command line arguments work for Tinn. Now you can pass an additional -v argument which switches the program into verbose mode, aka enables trace output.

While I was there, I changed the other command line arguments too. Now the port and the content directory are optional, defaulting to 8080 and the current directory when not supplied.

usage: tinn [-v[erbose]] [-p port] [content_directory]

Macros

I used some preprocessor macros for each of the logging levels. I'd been avoiding macros up to this point for anything other than very simple constants because again it was something additional to learn. But, I'm not going to learn by avoiding the problem so I experimented. I flip flopped a bit with my approach. At one point the whole console module was basically marcos. Today, it consists of a mostly normal C function (console) with a number of parameters to control output and a number of macros to call that function with some convenient defaults, one macro for each logging level.

Arguably I could have achieved the same result with normal functions. I believe if I declared them as inline the end result after compilation would be indistinguishable. Some guidance I've read online suggests to do just that and avoid macros as much as possible.

The guidance online actually seems to span a wide range of opinions. On one end the advice is to avoid macros because technically they are manipulating the code and it is not always clear what the macro is doing or even when you are using a macro. This can lead to some hard to diagnose bugs. On the other end are people using macros to build some impressive things, like pseudo generics. I'm leaning towards the former opinion for now but will stick with the macros I've created so far to see what, if any, problems crop up.

Colours

To make it easier to distinguish the levels of the console output, I decided to add a splash of colour. Errors are red, warnings orange etc. This is achieved in the terminal with escape code sequences, for example outputting \x1B[31m changes the terminal colour to red and \x1B[0m resets the colour (and anything else changed).

While these codes look initially confusing they do have a pattern. They start with the escape character, the unprintable character you get when you press the [ESC] key at the top left of the keyboard. I’m assuming that's why they are called escape code sequences, they start with an escape. I think I first saw an escape code 26 years ago, today is the first time I’ve realised why they have that name. Anyway, the escape character is number 27 (in decimal) on the ASCII table, which is 33 in octal or 1B in hexadecimal. This is why in code you see escape sequences start with \033 or \x1B, that is how you “print" an escape.

This is then followed by an opening square bracket [. Together, \x1B[ is known as the CSI, which is not just a series of hit TV shows but also the Control Sequence Introducer. Next there is a bunch of parameter data made up of mostly numbers and then a single (normally) letter character which signifies the type of escape sequence. Escape sequences that end with an m are called SGR or Select Graphic Rendition, which lets us set display attributes, like colour. The parameter 31 will for example change the foreground colour to red. Putting all this together gives us the complete sequence \x1B[31m for switching the terminal to red.

The wiki article on ANSI escape sequences lists all the display attributes you can manipulate, including the colours.

Errors

Next I reviewed my error handling strategy and made some adjustments. I’ve classified errors into two broad groups: errors I can recover from and errors I can't. For example, I should expect and recover from errors while reading a request from a client. On the flip side, in most cases I've got no idea how to handle a failure to allocate memory. I need the memory and if I can't get it instead of trying to limp on it is probably safer to end the program.

To assist in this strategy I created an additional logging level, panic, and the appropriate macro which will first log the error message and then exit the program. In addition to being convenient, it means that if I decide to re-review this strategy to make the program more robust, I can search for all the places the PANIC macro is used.

I then took this one step further and created a new utility function allocate. This is essentially a wrapper for a call to realloc with a check for errors that makes a call to the PANIC macro if anything went wrong. It’s a small function, but really tidies up the rest of my code whenever I allocate memory, I don’t need to keep repeating the error handling and I can assume the allocation either worked or the program terminated.

Memory management

In my last post I cited a problem with how routes store paths, specifically how they manage the memory for the path. When the server responds to a client, it maps the incoming requested path to a resource on the server to respond with. This map is stored as a linked list of possible routes. The path in each route is simply a character pointer aka a pointer to a string. The route list code makes sure to allocate memory for each route node as it is added to the linked list and to free that memory if the list is discarded. However it takes no responsibility for allocating the memory for whatever the path pointer points to.

The routes_add_static function scans the content directory and creates a route for each file. This function allocates the memory for each path, stores that memory location in the route’s path pointer and then with its job done, the function ends. The problem being, no code would ever release that memory.

This isn't really a problem because in the program's lifetime the static routes are computed once, near the start of the program, and then required until the program ends. So it didn’t jump out at me as something I needed to rethink.

When coding the blog module I made a mistake which did highlight the issue. The blog_build function generates a page for each post and adds a new route for that post. The paths for each post are computed based on its name as defined in the posts file and stored in the posts_list structure which is created when reading the posts file. Once the blog_build function has done its work, the program no longer needs the posts list and so at the end of the function the posts list is cleaned up and all the memory it had allocated is freed. This is a problem because the paths in the routes list are still pointing at some of that memory.

One solution would be to have the blog_build function allocate new memory for each path and copy the value from the post list to that new string. The routes list would point to that newly allocated memory and it would be left alone forever. This is very similar to how the routes_add_static function works.

I couldn’t bring myself to do it though because it felt like I was digging myself a hole to fall into at a later date. For one I’m now allocating memory for the same thing (paths) in two different places. What if I later add a third? Additionally, if I were to update either the static roots or blog code to detect changes to the file system and update the routes list, I would have to remember when removing a route to make sure I also cleaned up the memory used to store the path.

I see this as a problem of ownership. To keep things manageable, whatever bit of code allocates memory should be responsible for cleaning it up later and whenever possible the management of any particular type of data (e.g. paths) should have a single owner. In this example I have two functions allocating memory for paths and neither exists long enough to take responsibility to clean up. It seems sensible to me to move the management of memory for paths to the code already managing the routes list. It’s a single place that can be used for both static files and blog posts, and it sticks around for long enough to clean up when required. So, this is what I did.

The code to add a new route still accepts string for the path but will now allocate its own memory for that path and copies over the value. The routes_free function now loops through all stored routes freeing the memory allocated for the paths before freeing the memory for the list nodes and the list itself. All the memory management for routes is now handled internally and any other code using the routes lists need not worry about it.

This change made the code in routes_add_static far simpler as a big chunk of that code was actually allocating memory for the paths. For the build_blog function, the big change was how the buffer that stores the generated blog page was managed. In a similar pattern to the path, I moved the responsibility of creating and releasing the buffer to the routes list. The routes_new_buf function now creates a new buffer, adds the route for it and returns the new buffer so it can be populated. The routes_free function recognises which routes have buffers and will free them as required.

I did experiment with the Rust programming language a few years ago. Ownership and lifetimes are a big part of Rust’s strategy to achieve safety and avoid this kind of problem. My strategy here is influenced by what I learnt during those experiments. While these checks are not built into the C language or compilers, I believe it still helps to think in these terms. And no, I’m not planning to switch to Rust, I’m having too much fun with C.

Scanner

I've been reading “Crafting Interpreters" by Robert Nystrom, it’s a rather good book on interpreter design and programming languages in general. I'm very much enjoying it. It's going to be really useful when I build a full interpreter for whatever scripting language I decide to support. I'm excited to give it a go.

Unfortunately I started to see every problem as requiring an interpreter and this led me down a bit of a hole with regards to parsing both the posts file and HTTP requests. While I could write a full interpreter to turn a HTTP request into an Abstract Syntax Tree, it would be overly complicated and unnecessary. All I really need at this stage is a simple scanner to tokenize the request for me.

My scanner code consists of two functions, scanner_new which sets up a new scanner by taking a pointer to a string and a maximum length. I did this so I can scan subsets of strings, or strings that are not null terminated. And, scan_token which takes a pointer to the scanner and string of delimiters and returns the next token. A token is defined as a simple structure with two properties, a pointer to the start of the string and its length.

I use this in the blog module to read the post file. In fact I use two scanners, one to read each line and one to break the line into the fields for each post. This code replaces the scanf call I used before. I admit the new code is longer but it has two main advantages which I place a higher value upon, first it doesn’t have any hard coded lengths and second it’s far easier to read and maintain.

I also used the scanner to read the incoming HTTP requests. My previous code, which was a bit of a mess, only read part of the first line of the request. I’m now able to read all the headers. Sort of. I can read the header name and its value, what I’m not doing is parsing that value as there are many different formats for header values. It is at this point I realised I didn’t actually care about any of them, which is odd because this had been my primary goal for this sprint.

Caching

I took a step back and realised beyond all the other improvements I’ve already discussed in this post, the new feature I was actually trying to add was to enable caching. As it stood, every time a client requests a page or resource from the server, it downloads a fresh copy. This is because I’ve done nothing to let the client cache responses and re-use them as appropriate. For example it would make sense for the client to keep and re-use a copy of the shared CSS file that is used on every page and rarely changes.

In my head I convinced myself I needed to read the request headers for caching to work which is why I was so focused on the scanner. Then I realised what I really needed to do was set some response headers, something I could already do. Specifically, I needed to set the Cache-Control header. If I set that to max-age=604800 it would tell the client it could cache the response for 604,800 seconds, AKA a week. I could have and should have implemented that a while ago.

It’s not necessarily the best type of caching though, it’s a little aggressive. If instead I set Cache-Control to no-cache, (confusingly) the server can still cache the response, but it must check each time that the cached version is still up-to-date before using it. Side note: no-cache is often confused with no-store, which is what you would use to prevent the client from keeping any copy of the response.

For no-cache to work the client needs to know when the resource was last modified. This means adding an additional response header, Last-Modified, with that date. Then when the client requests a resource it will include the request the header If-Modified-Since with the date its cached version was last modified. If the server’s version has not been modified since that date, the server can respond with a status code of 304 - Not Modified instead of the resource telling the client to use its cached version.

Which means I didn’t waste a load of time, the server does need to read the request headers. It needs to check for the If-Modified-Since header. I just needed to write a little extra code to convert the incoming header value from its Internet Message Format date to a time_t variable so I could make comparisons.

With that done, I now have a fairly good caching system and a few options open to me on how aggressive I implement that cache. So far I’ve enabled caching on the static files using the no-cache option. This was fairly easy using the file system’s last modified date. I still need to implement it for generated content as I have a minor issue working out what the last modified date should be, but that can wait till next time.

Summary

On reflection, I have made quite a bit of progress. Breaking the code up into modules, learning about Make and makefiles, sorting out the logging, experimenting with macros, reviewing my error strategy, fixing a memory management issue, building a tokenizer and enabling caching. I took a few wrong turns and got bogged down a little but I feel I’m in a good place again, ready to work on the next sprint.

TC